Project

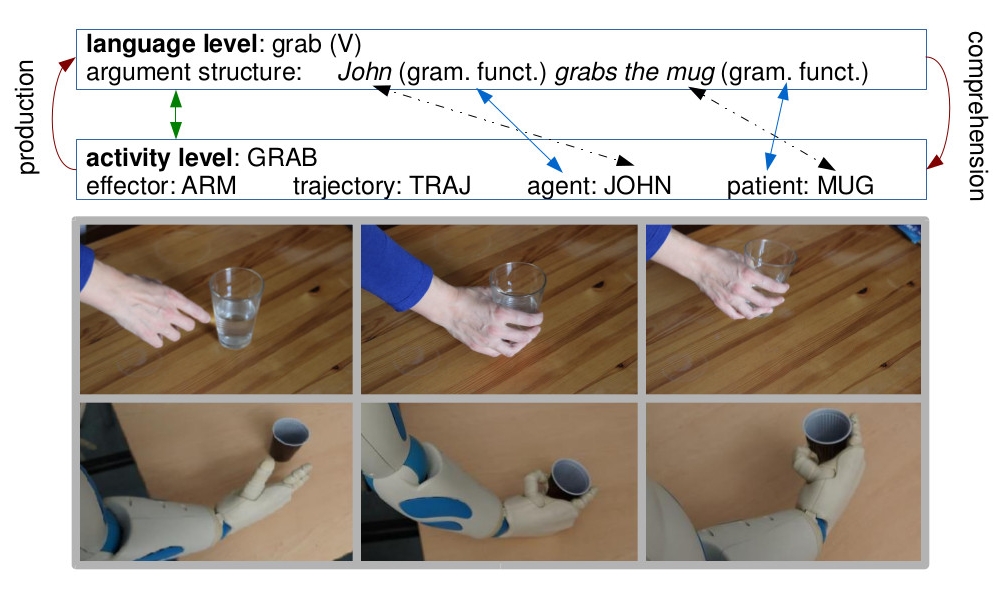

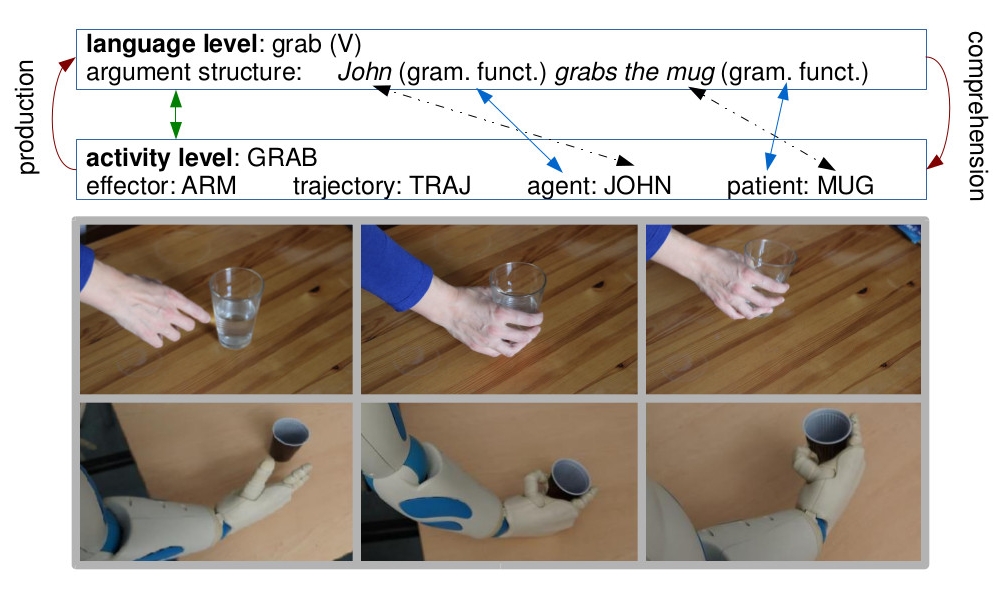

Future social robots will need the ability to acquire new tasks and behaviors on the job, both through observation and through natural language instruction, because robot designers cannot build in every environmental and task contingency that arises in typical application domains such as health care settings or people’s homes. In this project, we tackle the critical subproblem of learning new actions and their corresponding words by having the artificial system observe how humans perform and describe those actions. As a result, robots will not only understand action concepts but will also be able to learn and execute actions and talk about them. We propose to develop psychologically plausible, observation- and experimentation-based algorithms for action verb learning that are deeply integrated with natural language understanding and generation. These algorithms will allow the robot to observe and interpret human actions and to distill from them constitutive aspects such as motion trajectories and the objects involved. At the same time, the robot will be able to parse a concomitant utterance describing the action and thus relate syntactic elements of the verbal description to semantic elements of the action representation. By aligning syntactic roles with semantic types, the robot can then build an association between verb phrase structure and action argument types. The project will thus contribute an important, currently missing component of future social robots.
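The alignment of syntactic roles with semantic types can be illustrated with a deliberately simplified sketch. It is not the project's algorithm. It assumes that each utterance has already been parsed into syntactic roles (the labels `dobj` and `pobj` are borrowed from dependency-parsing conventions), that each noun is already grounded to a perceived object with a known semantic type, and it replaces any psychologically plausible learning mechanism with plain co-occurrence counting. `Episode`, `learn_verb_frames`, and the example scenes are hypothetical.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class Episode:
    """One hypothetical observation: a parsed utterance plus the perceived scene."""
    verb: str                      # head verb of the utterance, e.g. "put"
    roles: dict[str, str]          # syntactic role -> noun, e.g. {"dobj": "cup"}
    object_types: dict[str, str]   # perceived object -> semantic type (assumed given)


def learn_verb_frames(episodes: list[Episode]) -> dict[tuple[str, str], str]:
    """Associate each (verb, syntactic role) slot with its most frequent semantic type."""
    counts: dict[tuple[str, str], Counter] = defaultdict(Counter)
    for ep in episodes:
        for role, noun in ep.roles.items():
            sem_type = ep.object_types.get(noun)
            if sem_type is not None:  # skip words not grounded in the observed scene
                counts[(ep.verb, role)][sem_type] += 1
    # The majority semantic type becomes the learned argument type for the slot
    return {slot: c.most_common(1)[0][0] for slot, c in counts.items()}


# Hypothetical observations: "put the cup on the tray", "push the box", ...
episodes = [
    Episode("put", {"dobj": "cup", "pobj": "tray"}, {"cup": "graspable", "tray": "surface"}),
    Episode("put", {"dobj": "apple", "pobj": "table"}, {"apple": "graspable", "table": "surface"}),
    Episode("put", {"dobj": "cup", "pobj": "it"}, {"cup": "graspable"}),  # "it" is ungrounded
    Episode("push", {"dobj": "box"}, {"box": "graspable"}),
]
frames = learn_verb_frames(episodes)
print(frames)
# {('put', 'dobj'): 'graspable', ('put', 'pobj'): 'surface', ('push', 'dobj'): 'graspable'}
```

Counting across many episodes lets a single noisy or ungrounded observation be outvoted; a real system would additionally have to learn the grounding of words to objects and the semantic types themselves, which this sketch takes as given.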

Coordinator

Stephanie Gross

Austrian Research Institute for Artificial Intelligence (OFAI)

Freyung 6/6

1010 Vienna

Austria

stephanie.gross@ofai.at

http://www.ofai.at/~stephanie.gross/